Table of Contents

- 18.1 MySQL Cluster Overview

- 18.1.1 MySQL Cluster Core Concepts

- 18.1.2 MySQL Cluster Nodes, Node Groups, Replicas, and Partitions

- 18.1.3 MySQL Cluster Hardware, Software, and Networking Requirements

- 18.1.4 MySQL Cluster Development History

- 18.1.5 MySQL Server Using InnoDB Compared with MySQL Cluster

- 18.1.6 Known Limitations of MySQL Cluster

- 18.2 MySQL Cluster Installation and Upgrades

- 18.2.1 Installing MySQL Cluster on Linux

- 18.2.2 Installing MySQL Cluster on Windows

- 18.2.3 Initial Configuration of MySQL Cluster

- 18.2.4 Initial Startup of MySQL Cluster

- 18.2.5 MySQL Cluster Example with Tables and Data

- 18.2.6 Safe Shutdown and Restart of MySQL Cluster

- 18.2.7 Upgrading and Downgrading MySQL Cluster NDB 7.2

- 18.3 Configuration of MySQL Cluster

- 18.4 MySQL Cluster Programs

- 18.4.1 ndbd — The MySQL Cluster Data Node Daemon

- 18.4.2 ndbinfo_select_all — Select From ndbinfo Tables

- 18.4.3 ndbmtd — The MySQL Cluster Data Node Daemon (Multi-Threaded)

- 18.4.4 ndb_mgmd — The MySQL Cluster Management Server Daemon

- 18.4.5 ndb_mgm — The MySQL Cluster Management Client

- 18.4.6 ndb_blob_tool — Check and Repair BLOB and TEXT columns of MySQL Cluster Tables

- 18.4.7 ndb_config — Extract MySQL Cluster Configuration Information

- 18.4.8 ndb_cpcd — Automate Testing for NDB Development

- 18.4.9 ndb_delete_all — Delete All Rows from an NDB Table

- 18.4.10 ndb_desc — Describe NDB Tables

- 18.4.11 ndb_drop_index — Drop Index from an NDB Table

- 18.4.12 ndb_drop_table — Drop an NDB Table

- 18.4.13 ndb_error_reporter — NDB Error-Reporting Utility

- 18.4.14 ndb_index_stat — NDB Index Statistics Utility

- 18.4.15 ndb_print_backup_file — Print NDB Backup File Contents

- 18.4.16 ndb_print_file — Print NDB Disk Data File Contents

- 18.4.17 ndb_print_schema_file — Print NDB Schema File Contents

- 18.4.18 ndb_print_sys_file — Print NDB System File Contents

- 18.4.19 ndbd_redo_log_reader — Check and Print Content of Cluster Redo Log

- 18.4.20 ndb_restore — Restore a MySQL Cluster Backup

- 18.4.21 ndb_select_all — Print Rows from an NDB Table

- 18.4.22 ndb_select_count — Print Row Counts for NDB Tables

- 18.4.23 ndb_show_tables — Display List of NDB Tables

- 18.4.24 ndb_size.pl — NDBCLUSTER Size Requirement Estimator

- 18.4.25 ndb_waiter — Wait for MySQL Cluster to Reach a Given Status

- 18.4.26 Options Common to MySQL Cluster Programs

- 18.5 Management of MySQL Cluster

- 18.5.1 Summary of MySQL Cluster Start Phases

- 18.5.2 Commands in the MySQL Cluster Management Client

- 18.5.3 Online Backup of MySQL Cluster

- 18.5.4 MySQL Server Usage for MySQL Cluster

- 18.5.5 Performing a Rolling Restart of a MySQL Cluster

- 18.5.6 Event Reports Generated in MySQL Cluster

- 18.5.7 MySQL Cluster Log Messages

- 18.5.8 MySQL Cluster Single User Mode

- 18.5.9 Quick Reference: MySQL Cluster SQL Statements

- 18.5.10 The ndbinfo MySQL Cluster Information Database

- 18.5.11 MySQL Cluster Security Issues

- 18.5.12 MySQL Cluster Disk Data Tables

- 18.5.13 Adding MySQL Cluster Data Nodes Online

- 18.5.14 Distributed MySQL Privileges for MySQL Cluster

- 18.5.15 NDB API Statistics Counters and Variables

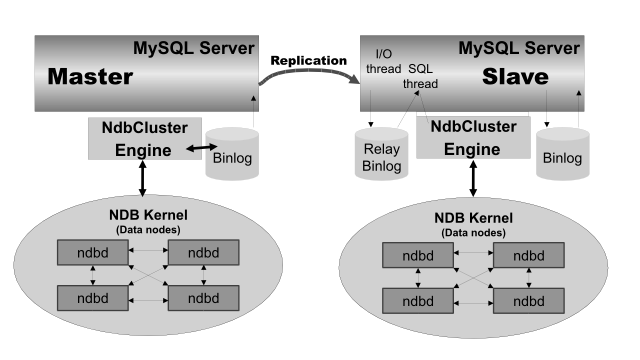

- 18.6 MySQL Cluster Replication

- 18.6.1 MySQL Cluster Replication: Abbreviations and Symbols

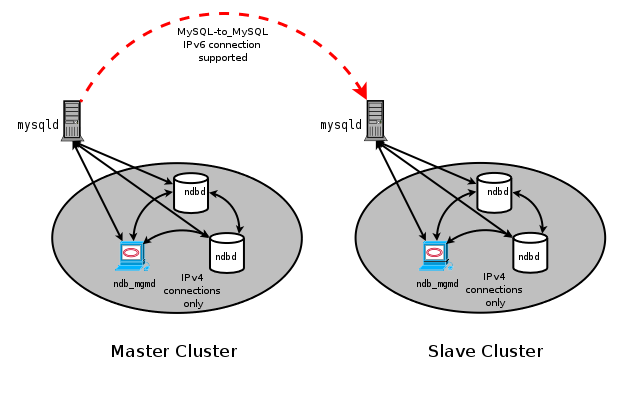

- 18.6.2 General Requirements for MySQL Cluster Replication

- 18.6.3 Known Issues in MySQL Cluster Replication

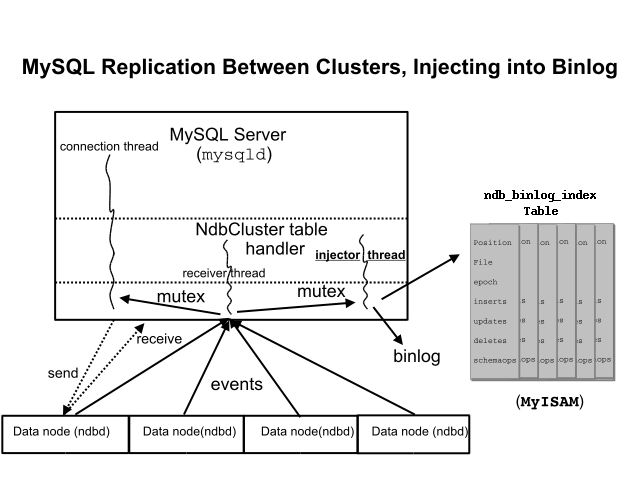

- 18.6.4 MySQL Cluster Replication Schema and Tables

- 18.6.5 Preparing the MySQL Cluster for Replication

- 18.6.6 Starting MySQL Cluster Replication (Single Replication Channel)

- 18.6.7 Using Two Replication Channels for MySQL Cluster Replication

- 18.6.8 Implementing Failover with MySQL Cluster Replication

- 18.6.9 MySQL Cluster Backups With MySQL Cluster Replication

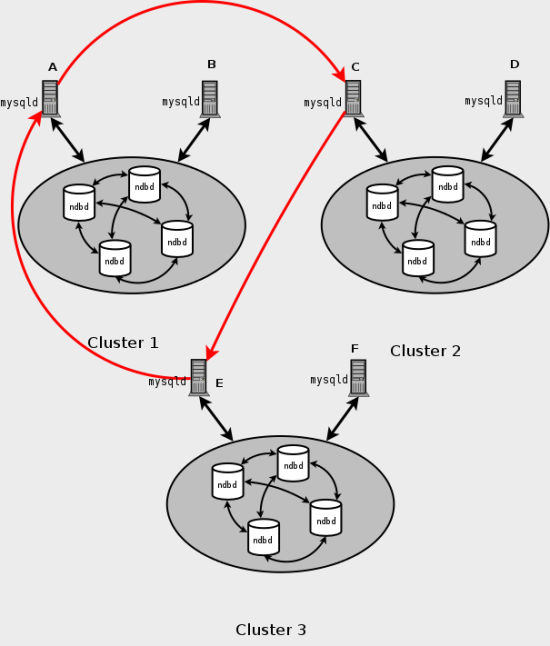

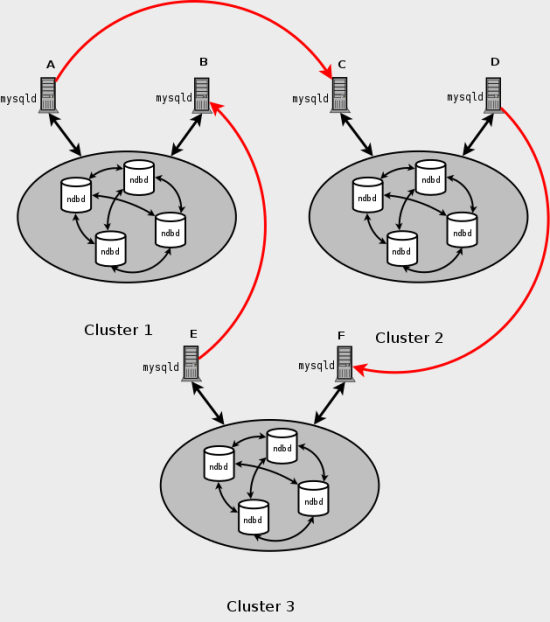

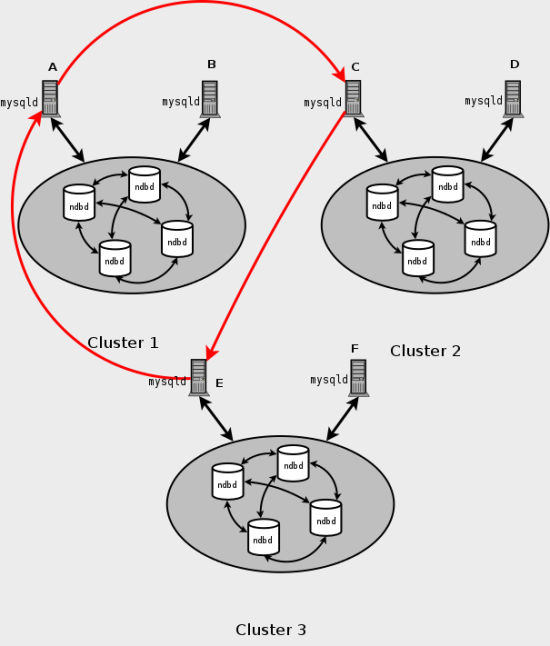

- 18.6.10 MySQL Cluster Replication: Multi-Master and Circular Replication

- 18.6.11 MySQL Cluster Replication Conflict Resolution

- 18.7 MySQL Cluster Release Notes

This chapter contains information about MySQL Cluster, which is a high-availability, high-redundancy version of MySQL adapted for the distributed computing environment. Recent releases of MySQL Cluster use version 7 of the NDBCLUSTER storage engine (also known as NDB) to enable running several computers with MySQL servers and other software in a cluster; the latest releases available for production use incorporate NDB version 7.2.

Support for the NDBCLUSTER storage engine is not included in the standard MySQL Server 5.5 binaries built by Oracle. Instead, users of MySQL Cluster binaries from Oracle should upgrade to the most recent binary release of MySQL Cluster for supported platforms; these include RPMs that should work with most Linux distributions. MySQL Cluster users who build from source should use the sources provided for MySQL Cluster. (Locations where the sources can be obtained are listed later in this section.)

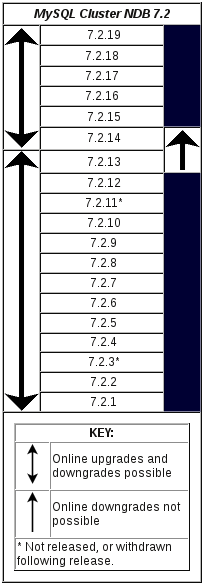

This chapter contains information about MySQL Cluster NDB 7.2 releases through 5.5.43-ndb-7.2.21. Currently, the MySQL Cluster NDB 7.2 release series is Generally Available (GA), as is MySQL Cluster NDB 7.1. MySQL Cluster NDB 7.0 and MySQL Cluster NDB 6.3 are previous GA release series; although they are still supported, we recommend that new deployments use MySQL Cluster NDB 7.2. For information about MySQL Cluster NDB 7.1, MySQL Cluster NDB 7.0, and previous versions of MySQL Cluster, see MySQL Cluster NDB 6.1 - 7.1, in the MySQL 5.1 Manual.

Release notes for the changes in each release of MySQL Cluster are located at MySQL Cluster 7.2 Release Notes.

Supported Platforms. MySQL Cluster is currently available and supported on a number of platforms. For exact levels of support available for specific combinations of operating system versions, operating system distributions, and hardware platforms, please refer to http://www.mysql.com/support/supportedplatforms/cluster.html.

Availability. MySQL Cluster binary and source packages are available for supported platforms from http://dev.mysql.com/downloads/cluster/.

MySQL Cluster release numbers. MySQL Cluster follows a somewhat different release pattern from the mainline MySQL Server 5.5 series of releases. In this Manual and other MySQL documentation, we identify these and later MySQL Cluster releases employing a version number that begins with “NDB”. This version number is that of the NDBCLUSTER storage engine used in the release, and not of the MySQL server version on which the MySQL Cluster release is based.

Version strings used in MySQL Cluster software. The version string displayed by MySQL Cluster programs uses this format:

mysql-mysql_server_version-ndb-ndb_engine_version

mysql_server_version represents the version of the MySQL Server on which the MySQL Cluster release is based. For MySQL Cluster NDB 7.2 production releases (NDB 7.2.4 and later), this is “5.5”. ndb_engine_version is the version of the NDB storage engine used by this release of the MySQL Cluster software. You can see this format used in the mysql client, as shown here:

    shell> mysql
    Welcome to the MySQL monitor.  Commands end with ; or \g.
    Your MySQL connection id is 2
    Server version: 5.5.43-ndb-7.2.21 Source distribution
    Type 'help;' or '\h' for help. Type '\c' to clear the buffer.
    mysql> SELECT VERSION()\G
    *************************** 1. row ***************************
    VERSION(): 5.5.43-ndb-7.2.21
    1 row in set (0.00 sec)

This version string is also displayed in the output of the SHOW command in the ndb_mgm client:

    ndb_mgm> SHOW
    Connected to Management Server at: localhost:1186
    Cluster Configuration
    ---------------------
    [ndbd(NDB)]     2 node(s)
    id=1    @10.0.10.6  (5.5.43-ndb-7.2.21, Nodegroup: 0, *)
    id=2    @10.0.10.8  (5.5.43-ndb-7.2.21, Nodegroup: 0)
    [ndb_mgmd(MGM)] 1 node(s)
    id=3    @10.0.10.2  (5.5.43-ndb-7.2.21)
    [mysqld(API)]   2 node(s)
    id=4    @10.0.10.10  (5.5.43-ndb-7.2.21)
    id=5 (not connected, accepting connect from any host)

The version string identifies the mainline MySQL version from which the MySQL Cluster release was branched and the version of the NDBCLUSTER storage engine used. For example, the full version string for MySQL Cluster NDB 7.2.4 (the first MySQL Cluster production release based on MySQL Server 5.5) is mysql-5.5.19-ndb-7.2.4. From this we can determine the following:

- Since the portion of the version string preceding “-ndb-” is the base MySQL Server version, this means that MySQL Cluster NDB 7.2.4 derives from MySQL 5.5.19, and contains all feature enhancements and bugfixes from MySQL 5.5 up to and including MySQL 5.5.19.

- Since the portion of the version string following “-ndb-” represents the version number of the NDB (or NDBCLUSTER) storage engine, MySQL Cluster NDB 7.2.4 uses version 7.2.4 of the NDBCLUSTER storage engine.
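The split described above can be sketched in a few lines of Python. This is only an illustration of the string format, not part of any MySQL Cluster tool; the names `ClusterVersion` and `parse_cluster_version` are hypothetical. The sketch assumes the leading “mysql-” prefix is optional, since the mysql client and ndb_mgm outputs shown earlier print the string without it.

```python
import re
from typing import NamedTuple


class ClusterVersion(NamedTuple):
    """The two components of a MySQL Cluster version string (hypothetical helper type)."""
    server_version: str  # base MySQL Server version, e.g. "5.5.19"
    ndb_version: str     # NDB storage engine version, e.g. "7.2.4"


# Optional "mysql-" prefix, the server version, the literal "-ndb-",
# and finally the NDB engine version.
_VERSION_RE = re.compile(r"^(?:mysql-)?(\d+(?:\.\d+)*)-ndb-(\d+(?:\.\d+)*)$")


def parse_cluster_version(version_string: str) -> ClusterVersion:
    """Split a version string into its server and NDB engine parts."""
    match = _VERSION_RE.match(version_string.strip())
    if match is None:
        raise ValueError(f"not a MySQL Cluster version string: {version_string!r}")
    return ClusterVersion(match.group(1), match.group(2))


if __name__ == "__main__":
    print(parse_cluster_version("mysql-5.5.19-ndb-7.2.4"))
    print(parse_cluster_version("5.5.43-ndb-7.2.21"))
```

A string without the “-ndb-” marker, such as a version reported by a standard MySQL Server, is rejected with a `ValueError` rather than being guessed at.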

New MySQL Cluster releases are numbered according to updates in the NDB storage engine, and do not necessarily correspond in a one-to-one fashion with mainline MySQL Server releases. For example, MySQL Cluster NDB 7.2.4 (as previously noted) is based on MySQL 5.5.19, while MySQL Cluster NDB 7.2.0 was based on MySQL 5.1.51 (version string: mysql-5.1.51-ndb-7.2.0).

Compatibility with standard MySQL 5.5 releases. While many standard MySQL schemas and applications can work using MySQL Cluster, it is also true that unmodified applications and database schemas may be slightly incompatible or have suboptimal performance when run using MySQL Cluster (see Section 18.1.6, “Known Limitations of MySQL Cluster”). Most of these issues can be overcome, but this also means that you are very unlikely to be able to switch an existing application datastore (one that currently uses, for example, MyISAM or InnoDB) to use the NDB storage engine without allowing for the possibility of changes in schemas, queries, and applications. In addition, the MySQL Server and MySQL Cluster codebases diverge considerably, so that the standard mysqld cannot function as a drop-in replacement for the version of mysqld supplied with MySQL Cluster.

MySQL Cluster development source trees. MySQL Cluster development trees can also be accessed from https://github.com/mysql/mysql-server.

The MySQL Cluster development sources maintained at https://github.com/mysql/mysql-server are licensed under the GPL. For information about obtaining MySQL sources using Git and building them yourself, see Section 2.9.3, “Installing MySQL Using a Development Source Tree”.

As with MySQL Server 5.5, MySQL Cluster NDB 7.2 is built using CMake.

Currently, MySQL Cluster NDB 7.0, MySQL Cluster NDB 7.1, and MySQL Cluster NDB 7.2 releases are all Generally Available (GA), although we recommend that new deployments use MySQL Cluster NDB 7.2. MySQL Cluster NDB 6.1, MySQL Cluster NDB 6.2, and MySQL Cluster NDB 6.3 are no longer in active development. For an overview of major features added in MySQL Cluster NDB 7.2, see Section 18.1.4, “MySQL Cluster Development History”. For an overview of major features added in past MySQL Cluster releases through MySQL Cluster NDB 7.1, see MySQL Cluster Development History.

This chapter represents a work in progress, and its contents are subject to revision as MySQL Cluster continues to evolve. Additional information regarding MySQL Cluster can be found on the MySQL Web site at http://www.mysql.com/products/cluster/.

Additional Resources. More information about MySQL Cluster can be found in the following places:

- For answers to some commonly asked questions about MySQL Cluster, see Section A.10, “MySQL FAQ: MySQL 5.5 and MySQL Cluster”.

- The MySQL Cluster mailing list: http://lists.mysql.com/cluster.

- The MySQL Cluster Forum: http://forums.mysql.com/list.php?25.

- Many MySQL Cluster users and developers blog about their experiences with MySQL Cluster, and make feeds of these available through PlanetMySQL.

- 18.1.1 MySQL Cluster Core Concepts

- 18.1.2 MySQL Cluster Nodes, Node Groups, Replicas, and Partitions

- 18.1.3 MySQL Cluster Hardware, Software, and Networking Requirements

- 18.1.4 MySQL Cluster Development History

- 18.1.5 MySQL Server Using InnoDB Compared with MySQL Cluster

- 18.1.6 Known Limitations of MySQL Cluster

MySQL Cluster is a technology that enables clustering of in-memory databases in a shared-nothing system. The shared-nothing architecture enables the system to work with very inexpensive hardware, and with a minimum of specific requirements for hardware or software.

MySQL Cluster is designed not to have any single point of failure. In a shared-nothing system, each component is expected to have its own memory and disk, and the use of shared storage mechanisms such as network shares, network file systems, and SANs is not recommended or supported.

MySQL Cluster integrates the standard MySQL server with an in-memory clustered storage engine called NDB (which stands for “Network DataBase”). In our documentation, the term NDB refers to the part of the setup that is specific to the storage engine, whereas “MySQL Cluster” refers to the combination of one or more MySQL servers with the NDB storage engine.

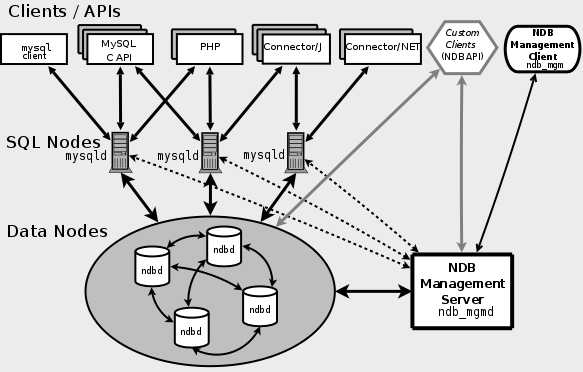

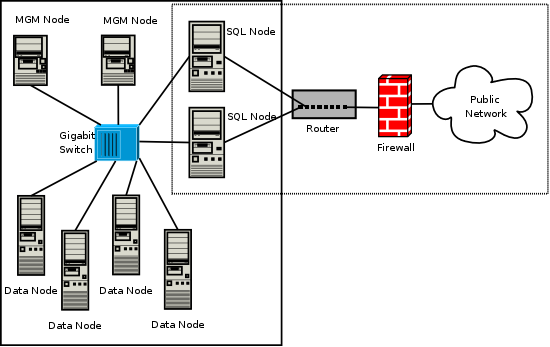

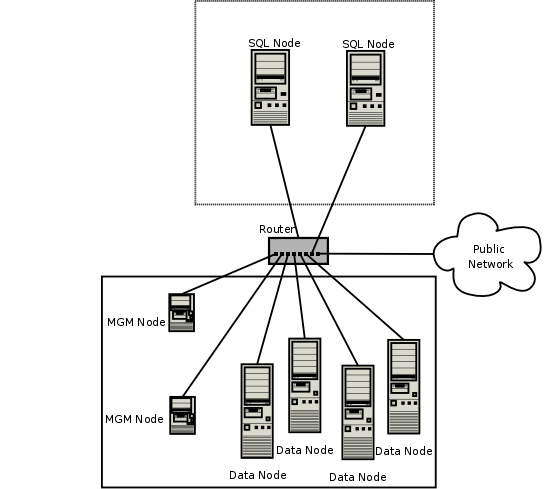

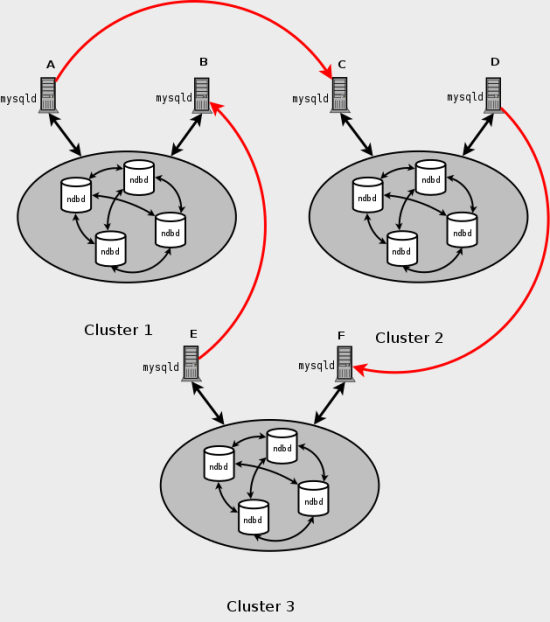

A MySQL Cluster consists of a set of computers, known as hosts, each running one or more processes. These processes, known as nodes, may include MySQL servers (for access to NDB data), data nodes (for storage of the data), one or more management servers, and possibly other specialized data access programs. The relationship of these components in a MySQL Cluster is shown here:

All these programs work together to form a MySQL Cluster (see Section 18.4, “MySQL Cluster Programs”). When data is stored by the NDB storage engine, the tables (and table data) are stored in the data nodes. Such tables are directly accessible from all other MySQL servers (SQL nodes) in the cluster. Thus, in a payroll application storing data in a cluster, if one application updates the salary of an employee, all other MySQL servers that query this data can see this change immediately.

Although a MySQL Cluster SQL node uses the mysqld server daemon, it differs in a number of critical respects from the mysqld binary supplied with the MySQL 5.5 distributions, and the two versions of mysqld are not interchangeable.

In addition, a MySQL server that is not connected to a MySQL Cluster cannot use the NDB storage engine and cannot access any MySQL Cluster data.

The data stored in the data nodes for MySQL Cluster can be mirrored; the cluster can handle failures of individual data nodes with no other impact than that a small number of transactions are aborted due to losing the transaction state. Because transactional applications are expected to handle transaction failure, this should not be a source of problems.

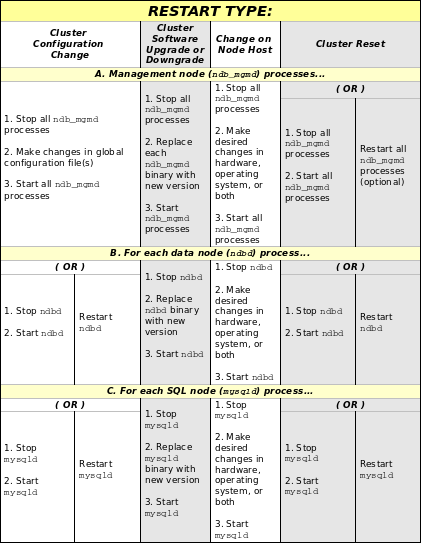

Individual nodes can be stopped and restarted, and can then rejoin the system (cluster). Rolling restarts (in which all nodes are restarted in turn) are used in making configuration changes and software upgrades (see Section 18.5.5, “Performing a Rolling Restart of a MySQL Cluster”). Rolling restarts are also used as part of the process of adding new data nodes online (see Section 18.5.13, “Adding MySQL Cluster Data Nodes Online”). For more information about data nodes, how they are organized in a MySQL Cluster, and how they handle and store MySQL Cluster data, see Section 18.1.2, “MySQL Cluster Nodes, Node Groups, Replicas, and Partitions”.

Backing up and restoring MySQL Cluster databases can be done using the NDB-native functionality found in the MySQL Cluster management client and the ndb_restore program included in the MySQL Cluster distribution. For more information, see Section 18.5.3, “Online Backup of MySQL Cluster”, and Section 18.4.20, “ndb_restore — Restore a MySQL Cluster Backup”. You can also use the standard MySQL functionality provided for this purpose in mysqldump and the MySQL server. See Section 4.5.4, “mysqldump — A Database Backup Program”, for more information.

MySQL Cluster nodes can use a number of different transport mechanisms for inter-node communications, including TCP/IP using standard 100 Mbps or faster Ethernet hardware. It is also possible to use the high-speed Scalable Coherent Interface (SCI) protocol with MySQL Cluster, although this is not required to use MySQL Cluster. SCI requires special hardware and software; see Section 18.3.5, “Using High-Speed Interconnects with MySQL Cluster”, for more about SCI and using it with MySQL Cluster.

NDBCLUSTER (also known as NDB) is an in-memory storage engine offering high-availability and data-persistence features.

The NDBCLUSTER storage engine can be configured with a range of failover and load-balancing options, but it is easiest to start with the storage engine at the cluster level. MySQL Cluster's NDB storage engine contains a complete set of data, dependent only on other data within the cluster itself.

The “Cluster” portion of MySQL Cluster is configured independently of the MySQL servers. In a MySQL Cluster, each part of the cluster is considered to be a node.

In many contexts, the term “node” is used to indicate a computer, but when discussing MySQL Cluster it means a process. It is possible to run multiple nodes on a single computer; for a computer on which one or more cluster nodes are being run we use the term cluster host.

There are three types of cluster nodes, and in a minimal MySQL Cluster configuration, there will be at least three nodes, one of each of these types:

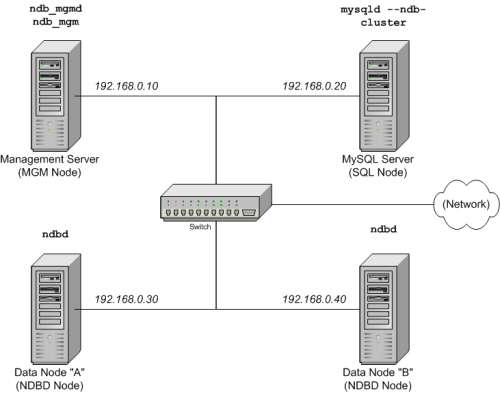

Management node: The role of this type of node is to manage the other nodes within the MySQL Cluster, performing such functions as providing configuration data, starting and stopping nodes, running backups, and so forth. Because this node type manages the configuration of the other nodes, a node of this type should be started first, before any other node. An MGM node is started with the command ndb_mgmd.

Data node: This type of node stores cluster data. There are as many data nodes as there are replicas, times the number of fragments (see Section 18.1.2, “MySQL Cluster Nodes, Node Groups, Replicas, and Partitions”). For example, with two replicas, each having two fragments, you need four data nodes. One replica is sufficient for data storage, but provides no redundancy; therefore, it is recommended to have 2 (or more) replicas to provide redundancy, and thus high availability. A data node is started with the command ndbd (see Section 18.4.1, “ndbd — The MySQL Cluster Data Node Daemon”) or ndbmtd (see Section 18.4.3, “ndbmtd — The MySQL Cluster Data Node Daemon (Multi-Threaded)”).

MySQL Cluster tables are normally stored completely in memory rather than on disk (this is why we refer to MySQL Cluster as an in-memory database). However, some MySQL Cluster data can be stored on disk; see Section 18.5.12, “MySQL Cluster Disk Data Tables”, for more information.

SQL node: This is a node that accesses the cluster data. In the case of MySQL Cluster, an SQL node is a traditional MySQL server that uses the NDBCLUSTER storage engine. An SQL node is a mysqld process started with the --ndbcluster and --ndb-connectstring options, which are explained elsewhere in this chapter, possibly with additional MySQL server options as well.

An SQL node is actually just a specialized type of API node, which designates any application which accesses MySQL Cluster data. Another example of an API node is the ndb_restore utility that is used to restore a cluster backup. It is possible to write such applications using the NDB API. For basic information about the NDB API, see Getting Started with the NDB API.

It is not realistic to expect to employ a three-node setup in a production environment. Such a configuration provides no redundancy; to benefit from MySQL Cluster's high-availability features, you must use multiple data and SQL nodes. The use of multiple management nodes is also highly recommended.

For a brief introduction to the relationships between nodes, node groups, replicas, and partitions in MySQL Cluster, see Section 18.1.2, “MySQL Cluster Nodes, Node Groups, Replicas, and Partitions”.

Configuration of a cluster involves configuring each individual node in the cluster and setting up individual communication links between nodes. MySQL Cluster is currently designed with the intention that data nodes are homogeneous in terms of processor power, memory space, and bandwidth. In addition, to provide a single point of configuration, all configuration data for the cluster as a whole is located in one configuration file.

The management server manages the cluster configuration file and the cluster log. Each node in the cluster retrieves the configuration data from the management server, and so requires a way to determine where the management server resides. When interesting events occur in the data nodes, the nodes transfer information about these events to the management server, which then writes the information to the cluster log.

In addition, there can be any number of cluster client processes or applications. These include standard MySQL clients, NDB-specific API programs, and management clients. These are described in the next few paragraphs.

Standard MySQL clients. MySQL Cluster can be used with existing MySQL applications written in PHP, Perl, C, C++, Java, Python, Ruby, and so on. Such client applications send SQL statements to and receive responses from MySQL servers acting as MySQL Cluster SQL nodes in much the same way that they interact with standalone MySQL servers.

MySQL clients using a MySQL Cluster as a data source can be modified to take advantage of the ability to connect with multiple MySQL servers to achieve load balancing and failover. For example, Java clients using Connector/J 5.0.6 and later can use jdbc:mysql:loadbalance:// URLs (improved in Connector/J 5.1.7) to achieve load balancing transparently; for more information about using Connector/J with MySQL Cluster, see Using Connector/J with MySQL Cluster.

NDB client programs. Client programs can be written that access MySQL Cluster data directly from the NDBCLUSTER storage engine, bypassing any MySQL Servers that may be connected to the cluster, using the NDB API, a high-level C++ API. Such applications may be useful for specialized purposes where an SQL interface to the data is not needed. For more information, see The NDB API.

NDB-specific Java applications can also be written for MySQL Cluster using the MySQL Cluster Connector for Java. This MySQL Cluster Connector includes ClusterJ, a high-level database API similar to object-relational mapping persistence frameworks such as Hibernate and JPA; ClusterJ connects directly to NDBCLUSTER, and so does not require access to a MySQL Server. Support is also provided in MySQL Cluster NDB 7.1 and later for ClusterJPA, an OpenJPA implementation for MySQL Cluster that leverages the strengths of ClusterJ and JDBC; ID lookups and other fast operations are performed using ClusterJ (bypassing the MySQL Server), while more complex queries that can benefit from MySQL's query optimizer are sent through the MySQL Server, using JDBC. See Java and MySQL Cluster, and The ClusterJ API and Data Object Model, for more information.

The Memcache API for MySQL Cluster, implemented as the loadable ndbmemcache storage engine for memcached version 1.6 and later, is available beginning with MySQL Cluster NDB 7.2.2. This API can be used to provide a persistent MySQL Cluster data store, accessed using the memcache protocol.

The standard memcached caching engine is included in the MySQL Cluster NDB 7.2 distribution (7.2.2 and later). Each memcached server has direct access to data stored in MySQL Cluster, but is also able to cache data locally and to serve (some) requests from this local cache.

For more information, see ndbmemcache—Memcache API for MySQL Cluster.

Management clients. These clients connect to the management server and provide commands for starting and stopping nodes gracefully, starting and stopping message tracing (debug versions only), showing node versions and status, starting and stopping backups, and so on. An example of this type of program is the ndb_mgm management client supplied with MySQL Cluster (see Section 18.4.5, “ndb_mgm — The MySQL Cluster Management Client”). Such applications can be written using the MGM API, a C-language API that communicates directly with one or more MySQL Cluster management servers. For more information, see The MGM API.

Oracle also makes available MySQL Cluster Manager, which provides an advanced command-line interface simplifying many complex MySQL Cluster management tasks, such as restarting a MySQL Cluster with a large number of nodes. The MySQL Cluster Manager client also supports commands for getting and setting the values of most node configuration parameters as well as mysqld server options and variables relating to MySQL Cluster. See MySQL™ Cluster Manager 1.3.5 User Manual, for more information.

Event logs. MySQL Cluster logs events by category (startup, shutdown, errors, checkpoints, and so on), priority, and severity. A complete listing of all reportable events may be found in Section 18.5.6, “Event Reports Generated in MySQL Cluster”. Event logs are of the two types listed here:

Cluster log: Keeps a record of all desired reportable events for the cluster as a whole.

Node log: A separate log which is also kept for each individual node.

Under normal circumstances, it is necessary and sufficient to keep and examine only the cluster log. The node logs need be consulted only for application development and debugging purposes.

Checkpoint. Generally speaking, when data is saved to disk, it is said that a checkpoint has been reached. More specific to MySQL Cluster, a checkpoint is a point in time where all committed transactions are stored on disk. With regard to the NDB storage engine, there are two types of checkpoints which work together to ensure that a consistent view of the cluster's data is maintained. These are shown in the following list:

Local Checkpoint (LCP): This is a checkpoint that is specific to a single node; however, LCPs take place for all nodes in the cluster more or less concurrently. An LCP involves saving all of a node's data to disk, and so usually occurs every few minutes. The precise interval varies, and depends upon the amount of data stored by the node, the level of cluster activity, and other factors.

Global Checkpoint (GCP): A GCP occurs every few seconds, when transactions for all nodes are synchronized and the redo-log is flushed to disk.

For more information about the files and directories created by local checkpoints and global checkpoints, see MySQL Cluster Data Node File System Directory Files.

This section discusses the manner in which MySQL Cluster divides and duplicates data for storage.

A number of concepts central to an understanding of this topic are discussed in the next few paragraphs.

(Data) Node. An ndbd process, which stores a replica, that is, a copy of the partition (see below) assigned to the node group of which the node is a member.

Each data node should be located on a separate computer. While it is also possible to host multiple ndbd processes on a single computer, such a configuration is not supported.

It is common for the terms “node” and “data node” to be used interchangeably when referring to an ndbd process; where mentioned, management nodes (ndb_mgmd processes) and SQL nodes (mysqld processes) are specified as such in this discussion.

Node Group. A node group consists of one or more nodes, and stores partitions, or sets of replicas (see next item).

The number of node groups in a MySQL Cluster is not directly configurable; it is a function of the number of data nodes and of the number of replicas (NoOfReplicas configuration parameter), as shown here:

[number_of_node_groups] = number_of_data_nodes / NoOfReplicas

Thus, a MySQL Cluster with 4 data nodes has 4 node groups if NoOfReplicas is set to 1 in the config.ini file, 2 node groups if NoOfReplicas is set to 2, and 1 node group if NoOfReplicas is set to 4. Replicas are discussed later in this section; for more information about NoOfReplicas, see Section 18.3.2.6, “Defining MySQL Cluster Data Nodes”.

All node groups in a MySQL Cluster must have the same number of data nodes.
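As a rough illustration of the relationship between data nodes, NoOfReplicas, and node groups, the following Python sketch computes the number of node groups and rejects combinations that would leave node groups of unequal size. The function name and its parameter names are hypothetical and exist only for this example; the cluster itself derives the value from config.ini.

```python
def number_of_node_groups(number_of_data_nodes: int, no_of_replicas: int) -> int:
    """Return number_of_data_nodes / NoOfReplicas, as described in the text.

    Raises ValueError when the data nodes cannot be divided evenly,
    since all node groups must have the same number of data nodes.
    """
    if number_of_data_nodes < 1 or no_of_replicas < 1:
        raise ValueError("both values must be positive integers")
    if number_of_data_nodes % no_of_replicas != 0:
        raise ValueError(
            "number of data nodes must be a multiple of NoOfReplicas "
            "so that all node groups have the same size"
        )
    return number_of_data_nodes // no_of_replicas


if __name__ == "__main__":
    # The three cases given in the text for a cluster with 4 data nodes.
    for replicas in (1, 2, 4):
        print(replicas, number_of_node_groups(4, replicas))
```

For 4 data nodes this yields 4, 2, and 1 node groups for NoOfReplicas values of 1, 2, and 4 respectively, matching the cases listed above.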

You can add new node groups (and thus new data nodes) online, to a running MySQL Cluster; see Section 18.5.13, “Adding MySQL Cluster Data Nodes Online”, for more information.

Partition. This is a portion of the data stored by the cluster. There are as many cluster partitions as nodes participating in the cluster. Each node is responsible for keeping at least one copy of any partitions assigned to it (that is, at least one replica) available to the cluster.

A replica belongs entirely to a single node; a node can (and usually does) store several replicas.

NDB and user-defined partitioning. MySQL Cluster normally partitions NDBCLUSTER tables automatically. However, it is also possible to employ user-defined partitioning with NDBCLUSTER tables. This is subject to the following limitations:

- Only the KEY and LINEAR KEY partitioning schemes are supported in production with NDB tables.

- When using ndbd, the maximum number of partitions that may be defined explicitly for any NDB table is 8 * [number of node groups]. (The number of node groups in a MySQL Cluster is determined as discussed previously in this section.)

  When using ndbmtd, this maximum is also affected by the number of local query handler threads, which is determined by the value of the MaxNoOfExecutionThreads configuration parameter. In such cases, the maximum number of partitions that may be defined explicitly for an NDB table is equal to 4 * MaxNoOfExecutionThreads * [number of node groups].

  See Section 18.4.3, “ndbmtd — The MySQL Cluster Data Node Daemon (Multi-Threaded)”, for more information.

For more information relating to MySQL Cluster and user-defined partitioning, see Section 18.1.6, “Known Limitations of MySQL Cluster”, and Section 19.5.2, “Partitioning Limitations Relating to Storage Engines”.
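The two partition limits given in this section can be written out as a small Python sketch. It simply restates the formulas from the text; the function name `max_explicit_partitions` and its parameters are hypothetical, and passing `None` for the thread count is an assumption used here to mean "data nodes run ndbd rather than ndbmtd".

```python
from typing import Optional


def max_explicit_partitions(
    node_groups: int,
    max_no_of_execution_threads: Optional[int] = None,
) -> int:
    """Maximum number of explicitly defined partitions for an NDB table.

    node_groups: number of node groups in the cluster.
    max_no_of_execution_threads: value of MaxNoOfExecutionThreads when the
        data nodes run ndbmtd; None when they run ndbd (assumption of this sketch).
    """
    if node_groups < 1:
        raise ValueError("a cluster has at least one node group")
    if max_no_of_execution_threads is None:
        # ndbd: 8 * [number of node groups]
        return 8 * node_groups
    if max_no_of_execution_threads < 1:
        raise ValueError("MaxNoOfExecutionThreads must be positive")
    # ndbmtd: 4 * MaxNoOfExecutionThreads * [number of node groups]
    return 4 * max_no_of_execution_threads * node_groups


if __name__ == "__main__":
    print(max_explicit_partitions(2))      # ndbd, two node groups
    print(max_explicit_partitions(2, 4))   # ndbmtd, MaxNoOfExecutionThreads = 4
```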

Replica. This is a copy of a cluster partition. Each node in a node group stores a replica. Also sometimes known as a partition replica. The number of replicas is equal to the number of nodes per node group.

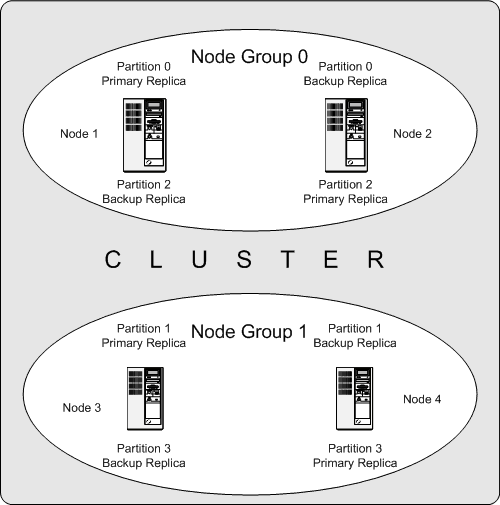

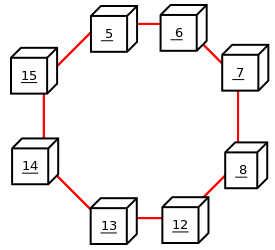

The following diagram illustrates a MySQL Cluster with four data nodes, arranged in two node groups of two nodes each; nodes 1 and 2 belong to node group 0, and nodes 3 and 4 belong to node group 1. Note that only data (ndbd) nodes are shown here; although a working cluster requires an ndb_mgmd process for cluster management and at least one SQL node to access the data stored by the cluster, these have been omitted in the figure for clarity.

The data stored by the cluster is divided into four partitions, numbered 0, 1, 2, and 3. Each partition is stored—in multiple copies—on the same node group. Partitions are stored on alternate node groups as follows:

Partition 0 is stored on node group 0; a primary replica (primary copy) is stored on node 1, and a backup replica (backup copy of the partition) is stored on node 2.

Partition 1 is stored on the other node group (node group 1); this partition's primary replica is on node 3, and its backup replica is on node 4.

Partition 2 is stored on node group 0. However, the placing of its two replicas is reversed from that of Partition 0; for Partition 2, the primary replica is stored on node 2, and the backup on node 1.

Partition 3 is stored on node group 1, and the placement of its two replicas is reversed from that of Partition 1. That is, its primary replica is located on node 4, with the backup on node 3.
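The layout just listed can be reproduced with a short Python sketch. The rule used here (partitions alternate between node groups, and the primary replica rotates among the nodes of a group) is an assumption chosen only because it matches this four-partition example; it should not be read as a description of how NDB actually distributes partitions. The function name `example_layout` is hypothetical.

```python
from typing import Dict, List


def example_layout(node_groups: List[List[int]], partitions: int) -> Dict[int, dict]:
    """Assign each partition a node group, a primary node, and backup nodes.

    node_groups: list of node groups, each a list of data node IDs.
    partitions:  number of partitions to place.
    """
    layout: Dict[int, dict] = {}
    group_count = len(node_groups)
    for partition in range(partitions):
        # Partitions are stored on alternate node groups.
        group_id = partition % group_count
        nodes = node_groups[group_id]
        # Within a group, the primary replica rotates from node to node.
        primary = nodes[(partition // group_count) % len(nodes)]
        backups = [node for node in nodes if node != primary]
        layout[partition] = {
            "node_group": group_id,
            "primary": primary,
            "backups": backups,
        }
    return layout


if __name__ == "__main__":
    # Nodes 1 and 2 form node group 0; nodes 3 and 4 form node group 1.
    for partition, placement in example_layout([[1, 2], [3, 4]], 4).items():
        print(partition, placement)
```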

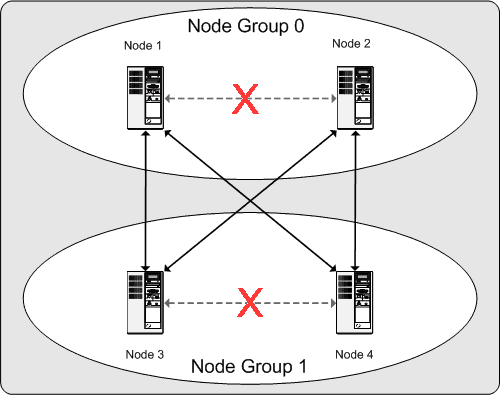

What this means regarding the continued operation of a MySQL Cluster is this: so long as each node group participating in the cluster has at least one node operating, the cluster has a complete copy of all data and remains viable. This is illustrated in the next diagram.

In this example, where the cluster consists of two node groups of two nodes each, any combination of at least one node in node group 0 and at least one node in node group 1 is sufficient to keep the cluster “alive” (indicated by arrows in the diagram). However, if both nodes from either node group fail, the remaining two nodes are not sufficient (shown by the arrows marked out with an X); in either case, the cluster has lost an entire partition and so can no longer provide access to a complete set of all cluster data.
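The survival rule described above (at least one running node in every node group) can be expressed as a small check. This is a minimal Python sketch; the function name and the way failed nodes are passed in are assumptions for illustration.

```python
def cluster_is_viable(node_groups, failed_nodes):
    """True if every node group still has at least one running node."""
    failed = set(failed_nodes)
    return all(any(node not in failed for node in group) for group in node_groups)


groups = [[1, 2], [3, 4]]  # node group 0 and node group 1 from the example

print(cluster_is_viable(groups, {2, 3}))  # True: nodes 1 and 4 cover both node groups
print(cluster_is_viable(groups, {1, 2}))  # False: node group 0 is lost entirely
```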

One of the strengths of MySQL Cluster is that it can be run on commodity hardware and has no unusual requirements in this regard, other than for large amounts of RAM, due to the fact that all live data storage is done in memory. (It is possible to reduce this requirement using Disk Data tables—see Section 18.5.12, “MySQL Cluster Disk Data Tables”, for more information about these.) Naturally, multiple and faster CPUs can enhance performance. Memory requirements for other MySQL Cluster processes are relatively small.

The software requirements for MySQL Cluster are also modest. Host operating systems do not require any unusual modules, services, applications, or configuration to support MySQL Cluster. For supported operating systems, a standard installation should be sufficient. The MySQL software requirements are simple: all that is needed is a production release of MySQL Cluster. It is not strictly necessary to compile MySQL yourself merely to be able to use MySQL Cluster. We assume that you are using the binaries appropriate to your platform, available from the MySQL Cluster software downloads page at http://dev.mysql.com/downloads/cluster/.

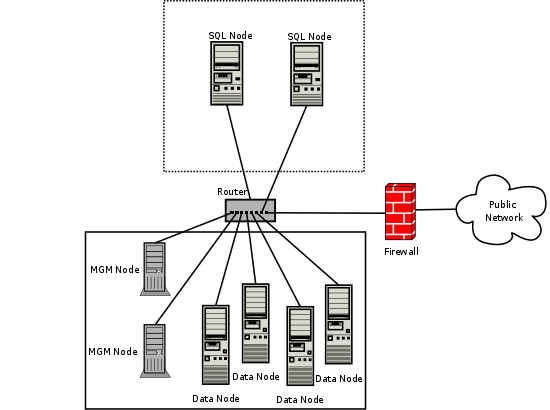

For communication between nodes, MySQL Cluster supports TCP/IP networking in any standard topology, and the minimum expected for each host is a standard 100 Mbps Ethernet card, plus a switch, hub, or router to provide network connectivity for the cluster as a whole. We strongly recommend that a MySQL Cluster be run on its own subnet which is not shared with machines not forming part of the cluster for the following reasons:

Security. Communications between MySQL Cluster nodes are not encrypted or shielded in any way. The only means of protecting transmissions within a MySQL Cluster is to run your MySQL Cluster on a protected network. If you intend to use MySQL Cluster for Web applications, the cluster should definitely reside behind your firewall and not in your network's De-Militarized Zone (DMZ) or elsewhere.

See Section 18.5.11.1, “MySQL Cluster Security and Networking Issues”, for more information.

Efficiency. Setting up a MySQL Cluster on a private or protected network enables the cluster to make exclusive use of bandwidth between cluster hosts. Using a separate switch for your MySQL Cluster not only helps protect against unauthorized access to MySQL Cluster data, it also ensures that MySQL Cluster nodes are shielded from interference caused by transmissions between other computers on the network. For enhanced reliability, you can use dual switches and dual cards to remove the network as a single point of failure; many device drivers support failover for such communication links.

Network communication and latency. MySQL Cluster requires communication between data nodes and API nodes (including SQL nodes), as well as between data nodes and other data nodes, to execute queries and updates. Communication latency between these processes can directly affect the observed performance and latency of user queries. In addition, to maintain consistency and service despite the silent failure of nodes, MySQL Cluster uses heartbeating and timeout mechanisms which treat an extended loss of communication from a node as node failure. This can lead to reduced redundancy. Recall that, to maintain data consistency, a MySQL Cluster shuts down when the last node in a node group fails. Thus, to avoid increasing the risk of a forced shutdown, breaks in communication between nodes should be avoided wherever possible.

The failure of a data or API node results in the abort of all uncommitted transactions involving the failed node. Data node recovery requires synchronization of the failed node's data from a surviving data node, and re-establishment of disk-based redo and checkpoint logs, before the data node returns to service. This recovery can take some time, during which the Cluster operates with reduced redundancy.

Heartbeating relies on timely generation of heartbeat signals by all nodes. This may not be possible if the node is overloaded, has insufficient machine CPU due to sharing with other programs, or is experiencing delays due to swapping. If heartbeat generation is sufficiently delayed, other nodes treat the node that is slow to respond as failed.

This treatment of a slow node as a failed one may or may not be desirable in some circumstances, depending on the impact of the node's slowed operation on the rest of the cluster. When setting timeout values such as HeartbeatIntervalDbDb and HeartbeatIntervalDbApi for MySQL Cluster, care must be taken to achieve quick detection, failover, and return to service, while avoiding potentially expensive false positives.

Where communication latencies between data nodes are expected to be higher than would be expected in a LAN environment (on the order of 100 µs), timeout parameters must be increased to ensure that any allowed periods of latency are well within configured timeouts. Increasing timeouts in this way has a corresponding effect on the worst-case time to detect failure and therefore time to service recovery.
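The trade-off between tolerating latency and detecting failures quickly can be illustrated with a rough model. In this Python sketch, the number of consecutive missed heartbeat intervals after which a node is treated as failed is passed in as a parameter, because this section does not state it; that parameter, the function names, and the sample latency are all assumptions for illustration rather than documented behavior.

```python
def worst_case_detection_ms(heartbeat_interval_ms: int, missed_intervals: int) -> int:
    """Rough upper bound on the time needed to notice a silent node failure.

    ASSUMPTION (not stated in this section): a node is treated as failed
    after `missed_intervals` consecutive heartbeat intervals pass without a
    heartbeat, and the failure may happen right after a heartbeat was sent,
    which adds up to one further interval.
    """
    return heartbeat_interval_ms * (missed_intervals + 1)


def tolerates_latency(heartbeat_interval_ms: int, missed_intervals: int,
                      max_expected_latency_ms: int) -> bool:
    """True if the longest expected communication stall fits within the timeout window."""
    return max_expected_latency_ms < heartbeat_interval_ms * missed_intervals


# Hypothetical numbers: compare heartbeat intervals of 1500 ms and 5000 ms,
# assuming 4 missed intervals and communication stalls of up to 8000 ms.
for interval in (1500, 5000):
    print(interval,
          tolerates_latency(interval, 4, 8000),
          worst_case_detection_ms(interval, 4))
```

Under these assumed numbers, the longer interval tolerates the stall but takes correspondingly longer to detect a real failure, which is the effect described above.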

LAN environments can typically be configured with stable low latency, and such that they can provide redundancy with fast failover. Individual link failures can be recovered from with minimal and controlled latency visible at the TCP level (where MySQL Cluster normally operates). WAN environments may offer a range of latencies, as well as redundancy with slower failover times. Individual link failures may require route changes to propagate before end-to-end connectivity is restored. At the TCP level this can appear as large latencies on individual channels. The worst-case observed TCP latency in these scenarios is related to the worst-case time for the IP layer to reroute around the failures.

SCI support. It is also possible to use the high-speed Scalable Coherent Interface (SCI) with MySQL Cluster, but this is not a requirement. See Section 18.3.5, “Using High-Speed Interconnects with MySQL Cluster”, for more about this protocol and its use with MySQL Cluster.

In this section, we discuss changes in the implementation of MySQL Cluster in MySQL Cluster NDB 7.2, as compared to MySQL Cluster NDB 7.1 and earlier releases. Changes and features most likely to be of interest are shown in the following table:

| MySQL Cluster NDB 7.2 |
|---|
| MySQL Cluster NDB 7.2.1 and later MySQL Cluster NDB 7.2 releases are based on MySQL 5.5. For more information about new features in MySQL Server 5.5, see Section 1.4, “What Is New in MySQL 5.5”. |
| Version 2 binary log row events, to provide support for improvements in MySQL Cluster Replication conflict detection (see next item). A given mysqld can be made to use Version 1 or Version 2 binary logging row events with the --log-bin-use-v1-row-events option. |
| Two new “primary wins” conflict detection and resolution functions NDB$EPOCH() and NDB$EPOCH_TRANS() for use in replication setups with 2 MySQL Clusters. For more information, see Section 18.6, “MySQL Cluster Replication”. |
| Distribution of MySQL users and privileges across MySQL Cluster SQL nodes is now supported; see Section 18.5.14, “Distributed MySQL Privileges for MySQL Cluster”. |
| Improved support for distributed pushed-down joins, which greatly improve performance for many joins that can be executed in parallel on the data nodes. |
| Default values for a number of data node configuration parameters such as HeartbeatIntervalDbDb and ArbitrationTimeout have been improved. |
| Support for the Memcache API using the loadable ndbmemcache storage engine. See ndbmemcache—Memcache API for MySQL Cluster. |

This section contains information about MySQL Cluster NDB 7.2 releases through 5.5.43-ndb-7.2.21, which is currently available for use in production beginning with MySQL Cluster NDB 7.2.4. MySQL Cluster NDB 7.1, MySQL Cluster NDB 7.0, and MySQL Cluster NDB 6.3 are previous GA release series; although these are still supported, we recommend that new deployments use MySQL Cluster NDB 7.2. For information about MySQL Cluster NDB 7.1 and previous releases, see MySQL Cluster NDB 6.1 - 7.1, in the MySQL 5.1 Manual.

The following improvements to MySQL Cluster have been made in MySQL Cluster NDB 7.2:

Based on MySQL Server 5.5. Previous MySQL Cluster release series, including MySQL Cluster NDB 7.1, used MySQL 5.1 as a base. Beginning with MySQL Cluster NDB 7.2.1, MySQL Cluster NDB 7.2 is based on MySQL Server 5.5, so that MySQL Cluster users can benefit from MySQL 5.5's improvements in scalability and performance monitoring. As with MySQL 5.5, MySQL Cluster NDB 7.2.1 and later use CMake for configuring and building from source in place of GNU Autotools (used in MySQL 5.1 and MySQL Cluster releases based on MySQL 5.1). For more information about changes and improvements in MySQL 5.5, see Section 1.4, “What Is New in MySQL 5.5”.

Conflict detection using GCI Reflection. MySQL Cluster Replication implements a new “primary wins” conflict detection and resolution mechanism. GCI Reflection applies in two-cluster circular “active-active” replication setups, tracking the order in which changes are applied on the MySQL Cluster designated as primary relative to changes originating on the other MySQL Cluster (referred to as the secondary). This relative ordering is used to determine whether changes originating on the slave are concurrent with any changes that originate locally, and are therefore potentially in conflict. Two new conflict detection functions are added: When using NDB$EPOCH(), rows that are out of sync on the secondary are realigned with those on the primary; with NDB$EPOCH_TRANS(), this realignment is applied to transactions. For more information, see Section 18.6.11, “MySQL Cluster Replication Conflict Resolution”.

Version 2 binary log row events. A new format for binary log row events, known as Version 2 binary log row events, provides support for improvements in MySQL Cluster Replication conflict detection (see previous item) and is intended to facilitate further improvements in MySQL Replication. You can cause a given mysqld to use Version 1 or Version 2 binary logging row events with the --log-bin-use-v1-row-events option. For backward compatibility, Version 2 binary log row events are also available in MySQL Cluster NDB 7.0 (7.0.27 and later) and MySQL Cluster NDB 7.1 (7.1.16 and later). However, MySQL Cluster NDB 7.0 and MySQL Cluster NDB 7.1 continue to use Version 1 binary log row events as the default, whereas the default in MySQL Cluster NDB 7.2.1 and later is to use Version 2 row events for binary logging.

Distribution of MySQL users and privileges. Automatic distribution of MySQL users and privileges across all SQL nodes in a given MySQL Cluster is now supported. To enable this support, you must first import an SQL script share/mysql/ndb_dist_priv.sql that is included with the MySQL Cluster NDB 7.2 distribution. This script creates several stored procedures which you can use to enable privilege distribution and perform related tasks.

When a new MySQL Server joins a MySQL Cluster where privilege distribution is in effect, it also participates in the privilege distribution automatically.

Once privilege distribution is enabled, all changes to the grant tables made on any mysqld attached to the cluster are immediately available on any other attached MySQL Servers. This is true whether the changes are made using CREATE USER, GRANT, or any of the other statements described elsewhere in this Manual (see Section 13.7.1, “Account Management Statements”). This includes privileges relating to stored routines and views; however, automatic distribution of the views or stored routines themselves is not currently supported.

For more information, see Section 18.5.14, “Distributed MySQL Privileges for MySQL Cluster”.

Distributed pushed-down joins. Many joins can now be pushed down to the NDB kernel for processing on MySQL Cluster data nodes. Previously, a join was handled in MySQL Cluster by means of repeated accesses of NDB by the SQL node; however, when pushed-down joins are enabled, a pushable join is sent in its entirety to the data nodes, where it can be distributed among the data nodes and executed in parallel on multiple copies of the data, with a single, merged result being returned to mysqld. This can reduce greatly the number of round trips between an SQL node and the data nodes required to handle such a join, leading to greatly improved performance of join processing.

It is possible to determine when joins can be pushed down to the data nodes by examining the join with EXPLAIN. A number of new system status variables (Ndb_pushed_queries_defined, Ndb_pushed_queries_dropped, Ndb_pushed_queries_executed, and Ndb_pushed_reads) and additions to the counters table (in the ndbinfo information database) can also be helpful in determining when and how well joins are being pushed down.

More information and examples are available in the description of the ndb_join_pushdown server system variable. See also the description of the status variables referenced in the previous paragraph, as well as Section 18.5.10.7, “The ndbinfo counters Table”.

Improved default values for data node configuration parameters. In order to provide more resiliency to environmental issues and better handling of some potential failure scenarios, and to perform more reliably with increases in memory and other resource requirements brought about by recent improvements in join handling by NDB, the default values for a number of MySQL Cluster data node configuration parameters have been changed. The parameters and changes are described in the following list:

- HeartbeatIntervalDbDb: Default increased from 1500 ms to 5000 ms.

- ArbitrationTimeout: Default increased from 3000 ms to 7500 ms.

- TimeBetweenEpochsTimeout: Now effectively disabled by default (default changed from 4000 ms to 0).

- SharedGlobalMemory: Default increased from 20 MB to 128 MB.

- MaxParallelScansPerFragment: Default increased from 32 to 256.

- CrashOnCorruptedTuple changed from FALSE to TRUE.

- Beginning with MySQL Cluster NDB 7.2.10, DefaultOperationRedoProblemAction changed from ABORT to QUEUE.

In addition, the value computed for MaxNoOfLocalScans when this parameter is not set in config.ini has been increased by a factor of 4.

Fail-fast data nodes. Beginning with MySQL Cluster NDB 7.2.1, data nodes handle corrupted tuples in a fail-fast manner by default. This is a change from previous versions of MySQL Cluster where this behavior had to be enabled explicitly by enabling the CrashOnCorruptedTuple configuration parameter. In MySQL Cluster NDB 7.2.1 and later, this parameter is enabled by default and must be explicitly disabled, in which case data nodes merely log a warning whenever they detect a corrupted tuple.

Memcache API support (ndbmemcache). The Memcached server is a distributed in-memory caching server that uses a simple text-based protocol. It is often employed with key-value stores. The Memcache API for MySQL Cluster, available beginning with MySQL Cluster NDB 7.2.2, is implemented as a loadable storage engine for memcached version 1.6 and later. This API can be used to access a persistent MySQL Cluster data store employing the memcache protocol. It is also possible for the memcached server to provide a strictly defined interface to existing MySQL Cluster tables.

Each memcache server can both cache data locally and access data stored in MySQL Cluster directly. Caching policies are configurable. For more information, see ndbmemcache—Memcache API for MySQL Cluster, in the MySQL Cluster API Developers Guide.

Rows per partition limit removed. Previously it was possible to store a maximum of 46137488 rows in a single MySQL Cluster partition (that is, per data node). Beginning with MySQL Cluster NDB 7.2.9, this limitation has been lifted, and there is no longer any practical upper limit to this number. (Bug #13844405, Bug #14000373)

MySQL Cluster NDB 7.2 is also supported by MySQL Cluster Manager, which provides an advanced command-line interface that can simplify many complex MySQL Cluster management tasks. See MySQL™ Cluster Manager 1.3.5 User Manual, for more information.

MySQL Server offers a number of choices in storage engines. Since both NDBCLUSTER and InnoDB can serve as transactional MySQL storage engines, users of MySQL Server sometimes become interested in MySQL Cluster. They see NDB as a possible alternative or upgrade to the default InnoDB storage engine in MySQL 5.5. While NDB and InnoDB share common characteristics, there are differences in architecture and implementation, so that some existing MySQL Server applications and usage scenarios can be a good fit for MySQL Cluster, but not all of them.

In this section, we discuss and compare some characteristics of the NDB storage engine used by MySQL Cluster NDB 7.2 with InnoDB used in MySQL 5.5. The next few sections provide a technical comparison. In many instances, decisions about when and where to use MySQL Cluster must be made on a case-by-case basis, taking all factors into consideration. While it is beyond the scope of this documentation to provide specifics for every conceivable usage scenario, we also attempt to offer some very general guidance on the relative suitability of some common types of applications for NDB as opposed to InnoDB backends.

Recent MySQL Cluster NDB 7.2 releases use a mysqld based on MySQL 5.5, including support for InnoDB 1.1. While it is possible to use InnoDB tables with MySQL Cluster, such tables are not clustered. It is also not possible to use programs or libraries from a MySQL Cluster NDB 7.2 distribution with MySQL Server 5.5, or the reverse.

While it is also true that some types of common business applications can be run either on MySQL Cluster or on MySQL Server (most likely using the InnoDB storage engine), there are some important architectural and implementation differences. Section 18.1.5.1, “Differences Between the NDB and InnoDB Storage Engines”, provides a summary of these differences. Due to the differences, some usage scenarios are clearly more suitable for one engine or the other; see Section 18.1.5.2, “NDB and InnoDB Workloads”. This in turn has an impact on the types of applications that are better suited for use with NDB or InnoDB. See Section 18.1.5.3, “NDB and InnoDB Feature Usage Summary”, for a comparison of the relative suitability of each for use in common types of database applications.

For information about the relative characteristics of the NDB and MEMORY storage engines, see When to Use MEMORY or MySQL Cluster.

See Chapter 15, Alternative Storage Engines, for additional information about MySQL storage engines.

The MySQL Cluster NDB storage engine is implemented using a distributed, shared-nothing architecture, which causes it to behave differently from InnoDB in a number of ways. For those unaccustomed to working with NDB, unexpected behaviors can arise due to its distributed nature with regard to transactions, foreign keys, table limits, and other characteristics. These are shown in the following table:

| Feature | InnoDB | MySQL Cluster |
|---|---|---|
| MySQL Server Version | 5.5 | MySQL Cluster NDB 7.2: 5.5; MySQL Cluster NDB 7.3: 5.6 |
| MySQL Cluster Version | N/A | |
| Storage Limits | 64TB | 3TB (Practical upper limit based on 48 data nodes with 64GB RAM each; can be increased with disk-based data and BLOBs) |
| Foreign Keys | Yes | Available in MySQL Cluster NDB 7.3 and later. (Prior to MySQL Cluster NDB 7.3: Ignored) |
| Transactions | All standard types | |
| MVCC | Yes | No |
| Data Compression | Yes | No (MySQL Cluster checkpoint and backup files can be compressed) |
| Large Row Support (> 14K) | Supported for (Using these types to store very large amounts of data can lower MySQL Cluster performance) | |
| Replication Support | Asynchronous and semisynchronous replication using MySQL Replication | Automatic synchronous replication within a MySQL Cluster. Asynchronous replication between MySQL Clusters, using MySQL Replication |
| Scaleout for Read Operations | Yes (MySQL Replication) | Yes (Automatic partitioning in MySQL Cluster; MySQL Replication) |
| Scaleout for Write Operations | Requires application-level partitioning (sharding) | Yes (Automatic partitioning in MySQL Cluster is transparent to applications) |
| High Availability (HA) | Requires additional software | Yes (Designed for 99.999% uptime) |
| Node Failure Recovery and Failover | Requires additional software | Automatic (Key element in MySQL Cluster architecture) |
| Time for Node Failure Recovery | 30 seconds or longer | Typically < 1 second |
| Real-Time Performance | No | Yes |
| In-Memory Tables | No | Yes (Some data can optionally be stored on disk; both in-memory and disk data storage are durable) |
| NoSQL Access to Storage Engine | Native memcached interface in development (see the MySQL Dev Zone article MySQL Cluster 7.2 (DMR2): NoSQL, Key/Value, Memcached) | Yes (Multiple APIs, including Memcached, Node.js/JavaScript, Java, JPA, C++, and HTTP/REST) |
| Concurrent and Parallel Writes | Not supported | Up to 48 writers, optimized for concurrent writes |
| Conflict Detection and Resolution (Multiple Replication Masters) | No | Yes |
| Hash Indexes | No | Yes |
| Online Addition of Nodes | Read-only replicas using MySQL Replication | Yes (all node types) |
| Online Upgrades | No | Yes |
| Online Schema Modifications | No | Yes |

MySQL Cluster has a range of unique attributes that make it ideal to serve applications requiring high availability, fast failover, high throughput, and low latency. Due to its distributed architecture and multi-node implementation, MySQL Cluster also has specific constraints that may keep some workloads from performing well. A number of major differences in behavior between the NDB and InnoDB storage engines with regard to some common types of database-driven application workloads are shown in the following table:

| Workload | InnoDB | MySQL Cluster (NDB) |
|---|---|---|
| High-Volume OLTP Applications | Yes | Yes |
| DSS Applications (data marts, analytics) | Yes | Limited (Join operations across OLTP datasets not exceeding 3TB in size) |
| Custom Applications | Yes | Yes |
| Packaged Applications | Yes | Limited (should be mostly primary key access). Note: MySQL Cluster NDB 7.3 supports foreign keys. |
| In-Network Telecoms Applications (HLR, HSS, SDP) | No | Yes |
| Session Management and Caching | Yes | Yes |
| E-Commerce Applications | Yes | Yes |
| User Profile Management, AAA Protocol | Yes | Yes |

When comparing application feature requirements to the capabilities of InnoDB with NDB, some are clearly more compatible with one storage engine than the other.

The following table lists supported application features according to the storage engine to which each feature is typically better suited.

| Preferred application requirements for | Preferred application requirements for |
|---|---|
| | |

- 18.1.6.1 Noncompliance with SQL Syntax in MySQL Cluster

- 18.1.6.2 Limits and Differences of MySQL Cluster from Standard MySQL Limits

- 18.1.6.3 Limits Relating to Transaction Handling in MySQL Cluster

- 18.1.6.4 MySQL Cluster Error Handling

- 18.1.6.5 Limits Associated with Database Objects in MySQL Cluster

- 18.1.6.6 Unsupported or Missing Features in MySQL Cluster

- 18.1.6.7 Limitations Relating to Performance in MySQL Cluster

- 18.1.6.8 Issues Exclusive to MySQL Cluster

- 18.1.6.9 Limitations Relating to MySQL Cluster Disk Data Storage

- 18.1.6.10 Limitations Relating to Multiple MySQL Cluster Nodes

- 18.1.6.11 Previous MySQL Cluster Issues Resolved in MySQL 5.1, MySQL Cluster NDB 6.x, and MySQL Cluster NDB 7.x

In the sections that follow, we discuss known limitations in current releases of MySQL Cluster as compared with the features available when using the MyISAM and InnoDB storage engines. Known bugs that we intend to correct in upcoming releases of MySQL Cluster can be found in the MySQL bugs database at http://bugs.mysql.com. They are filed under “MySQL Server:” in the following categories:

- MySQL Cluster

- Cluster Direct API (NDBAPI)

- Cluster Disk Data

- Cluster Replication

- ClusterJ

This information is intended to be complete with respect to the conditions just set forth. You can report any discrepancies that you encounter to the MySQL bugs database using the instructions given in Section 1.7, “How to Report Bugs or Problems”. If we do not plan to fix the problem in MySQL Cluster NDB 7.2, we will add it to the list.

See Section 18.1.6.11, “Previous MySQL Cluster Issues Resolved in MySQL 5.1, MySQL Cluster NDB 6.x, and MySQL Cluster NDB 7.x” for a list of issues in MySQL Cluster in MySQL 5.1 that have been resolved in the current version.

Limitations and other issues specific to MySQL Cluster Replication are described in Section 18.6.3, “Known Issues in MySQL Cluster Replication”.

Some SQL statements relating to certain MySQL features produce errors when used with NDB tables, as described in the following list:

Temporary tables. Temporary tables are not supported. Trying either to create a temporary table that uses the NDB storage engine or to alter an existing temporary table to use NDB fails with the error Table storage engine 'ndbcluster' does not support the create option 'TEMPORARY'.

Indexes and keys in NDB tables. Keys and indexes on MySQL Cluster tables are subject to the following limitations:

- Column width. Attempting to create an index on an NDB table column whose width is greater than 3072 bytes succeeds, but only the first 3072 bytes are actually used for the index. In such cases, a warning Specified key was too long; max key length is 3072 bytes is issued, and a SHOW CREATE TABLE statement shows the length of the index as 3072.

- TEXT and BLOB columns. You cannot create indexes on NDB table columns that use any of the TEXT or BLOB data types.

- FULLTEXT indexes. The NDB storage engine does not support FULLTEXT indexes, which are possible for MyISAM tables only. However, you can create indexes on VARCHAR columns of NDB tables.

- USING HASH keys and NULL. Using nullable columns in unique keys and primary keys means that queries using these columns are handled as full table scans. To work around this issue, make the column NOT NULL, or re-create the index without the USING HASH option.

- Prefixes. There are no prefix indexes; only entire columns can be indexed. (The size of an NDB column index is always the same as the width of the column in bytes, up to and including 3072 bytes, as described earlier in this section. Also see Section 18.1.6.6, “Unsupported or Missing Features in MySQL Cluster”, for additional information.)

- BIT columns. A BIT column cannot be a primary key, unique key, or index, nor can it be part of a composite primary key, unique key, or index.

- AUTO_INCREMENT columns. Like other MySQL storage engines, the NDB storage engine can handle a maximum of one AUTO_INCREMENT column per table. However, in the case of a Cluster table with no explicit primary key, an AUTO_INCREMENT column is automatically defined and used as a “hidden” primary key. For this reason, you cannot define a table that has an explicit AUTO_INCREMENT column unless that column is also declared using the PRIMARY KEY option. Attempting to create a table with an AUTO_INCREMENT column that is not the table's primary key, and using the NDB storage engine, fails with an error.

MySQL Cluster and geometry data types. Geometry data types (WKT and WKB) are supported for NDB tables. However, spatial indexes are not supported.

Character sets and binary log files. Currently, the ndb_apply_status and ndb_binlog_index tables are created using the latin1 (ASCII) character set. Because names of binary logs are recorded in these tables, binary log files named using non-Latin characters are not referenced correctly in these tables. This is a known issue, which we are working to fix. (Bug #50226)

To work around this problem, use only Latin-1 characters when naming binary log files or setting any of the --basedir, --log-bin, or --log-bin-index options.

Creating NDB tables with user-defined partitioning. Support for user-defined partitioning in MySQL Cluster is restricted to [LINEAR] KEY partitioning. Using any other partitioning type with ENGINE=NDB or ENGINE=NDBCLUSTER in a CREATE TABLE statement results in an error.

It is possible to override this restriction, but doing so is not supported for use in production settings. For details, see User-defined partitioning and the NDB storage engine (MySQL Cluster).

Default partitioning scheme. All MySQL Cluster tables are by default partitioned by KEY using the table's primary key as the partitioning key. If no primary key is explicitly set for the table, the “hidden” primary key automatically created by the NDB storage engine is used instead. For additional discussion of these and related issues, see Section 19.2.5, “KEY Partitioning”.

CREATE TABLE and ALTER TABLE statements that would cause a user-partitioned NDBCLUSTER table not to meet either or both of the following two requirements are not permitted, and fail with an error:

- The table must have an explicit primary key.

- All columns listed in the table's partitioning expression must be part of the primary key.

Exception. If a user-partitioned NDBCLUSTER table is created using an empty column-list (that is, using PARTITION BY [LINEAR] KEY()), then no explicit primary key is required.

Maximum number of partitions for NDBCLUSTER tables. The maximum number of partitions that can be defined for an NDBCLUSTER table when employing user-defined partitioning is 8 per node group. (See Section 18.1.2, “MySQL Cluster Nodes, Node Groups, Replicas, and Partitions”, for more information about MySQL Cluster node groups.)

DROP PARTITION not supported. It is not possible to drop partitions from NDB tables using ALTER TABLE ... DROP PARTITION. The other partitioning extensions to ALTER TABLE (ADD PARTITION, REORGANIZE PARTITION, and COALESCE PARTITION) are supported for Cluster tables, but use copying and so are not optimized. See Section 19.3.1, “Management of RANGE and LIST Partitions” and Section 13.1.7, “ALTER TABLE Syntax”.

Row-based replication. When using row-based replication with MySQL Cluster, binary logging cannot be disabled. That is, the NDB storage engine ignores the value of sql_log_bin. (Bug #16680)
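The two requirements for user-partitioned NDBCLUSTER tables given earlier in this section, together with the KEY() exception, can be sketched as a small pre-flight check. This Python sketch only models the rules as worded here; it does not query a server, and the function name, argument shapes, and column names are hypothetical.

```python
def check_user_partitioning(primary_key, partition_columns):
    """Return a list of rule violations (empty if the definition is acceptable).

    primary_key: column names in the explicit primary key ([] if there is none).
    partition_columns: columns named in PARTITION BY [LINEAR] KEY(...);
        an empty list models the empty column list, KEY().
    """
    if not partition_columns:
        # Exception from the text: with KEY(), no explicit primary key is required.
        return []
    problems = []
    if not primary_key:
        problems.append("table must have an explicit primary key")
    missing = [col for col in partition_columns if col not in primary_key]
    if missing:
        problems.append("partitioning columns not in primary key: " + ", ".join(missing))
    return problems


print(check_user_partitioning(["id", "region"], ["region"]))  # acceptable
print(check_user_partitioning(["id"], ["region"]))            # region is not part of the key
print(check_user_partitioning([], []))                        # KEY() exception applies
```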

In this section, we list limits found in MySQL Cluster that either differ from limits found in, or that are not found in, standard MySQL.

Memory usage and recovery. Memory consumed when data is inserted into an NDB table is not automatically recovered when deleted, as it is with other storage engines. Instead, the following rules hold true:

A DELETE statement on an NDB table makes the memory formerly used by the deleted rows available for re-use by inserts on the same table only. However, this memory can be made available for general re-use by performing OPTIMIZE TABLE.

A rolling restart of the cluster also frees any memory used by deleted rows. See Section 18.5.5, “Performing a Rolling Restart of a MySQL Cluster”.

A DROP TABLE or TRUNCATE TABLE operation on an NDB table frees the memory that was used by this table for re-use by any NDB table, either by the same table or by another NDB table.

Note. Recall that TRUNCATE TABLE drops and re-creates the table. See Section 13.1.33, “TRUNCATE TABLE Syntax”.

Limits imposed by the cluster's configuration. A number of hard limits exist which are configurable, but available main memory in the cluster sets limits. See the complete list of configuration parameters in Section 18.3.2, “MySQL Cluster Configuration Files”. Most configuration parameters can be upgraded online. These hard limits include:

Database memory size and index memory size (DataMemory and IndexMemory, respectively).

DataMemory is allocated as 32KB pages. As each DataMemory page is used, it is assigned to a specific table; once allocated, this memory cannot be freed except by dropping the table.

See Section 18.3.2.6, “Defining MySQL Cluster Data Nodes”, for more information.

The maximum number of operations that can be performed per transaction is set using the configuration parameters MaxNoOfConcurrentOperations and MaxNoOfLocalOperations.

Note. Bulk loading, TRUNCATE TABLE, and ALTER TABLE are handled as special cases by running multiple transactions, and so are not subject to this limitation.

Different limits related to tables and indexes. For example, the maximum number of ordered indexes in the cluster is determined by MaxNoOfOrderedIndexes, and the maximum number of ordered indexes per table is 16.

Node and data object maximums. The following limits apply to numbers of cluster nodes and metadata objects:

The maximum number of data nodes is 48.

A data node must have a node ID in the range of 1 to 48, inclusive. (Management and API nodes may use node IDs in the range 1 to 255, inclusive.)

The total maximum number of nodes in a MySQL Cluster is 255. This number includes all SQL nodes (MySQL Servers), API nodes (applications accessing the cluster other than MySQL servers), data nodes, and management servers.

The maximum number of metadata objects in current versions of MySQL Cluster is 20320. This limit is hard-coded.

See Section 18.1.6.11, “Previous MySQL Cluster Issues Resolved in MySQL 5.1, MySQL Cluster NDB 6.x, and MySQL Cluster NDB 7.x”, for more information.

A number of limitations exist in MySQL Cluster with regard to the handling of transactions. These include the following:

Transaction isolation level. The NDBCLUSTER storage engine supports only the READ COMMITTED transaction isolation level. (InnoDB, for example, supports READ COMMITTED, READ UNCOMMITTED, REPEATABLE READ, and SERIALIZABLE.) See Section 18.5.3.4, “MySQL Cluster Backup Troubleshooting”, for information on how this can affect backing up and restoring Cluster databases.

Transactions and BLOB or TEXT columns. NDBCLUSTER stores only part of a column value that uses any of MySQL's BLOB or TEXT data types in the table visible to MySQL; the remainder of the BLOB or TEXT is stored in a separate internal table that is not accessible to MySQL. This gives rise to two related issues of which you should be aware whenever executing SELECT statements on tables that contain columns of these types:

For any SELECT from a MySQL Cluster table: If the SELECT includes a BLOB or TEXT column, the READ COMMITTED transaction isolation level is converted to a read with read lock. This is done to guarantee consistency.

For any SELECT which uses a unique key lookup to retrieve any columns that use any of the BLOB or TEXT data types and that is executed within a transaction, a shared read lock is held on the table for the duration of the transaction (that is, until the transaction is either committed or aborted).

This issue does not occur for queries that use index or table scans, even against NDB tables having BLOB or TEXT columns.

For example, consider the table t defined by the following CREATE TABLE statement:

    CREATE TABLE t (
        a INT NOT NULL AUTO_INCREMENT PRIMARY KEY,
        b INT NOT NULL,
        c INT NOT NULL,
        d TEXT,
        INDEX i(b),
        UNIQUE KEY u(c)
    ) ENGINE = NDB;

Either of the following queries on t causes a shared read lock, because the first query uses a primary key lookup and the second uses a unique key lookup:

    SELECT * FROM t WHERE a = 1;
    SELECT * FROM t WHERE c = 1;

However, none of the four queries shown here causes a shared read lock:

    SELECT * FROM t WHERE b = 1;
    SELECT * FROM t WHERE d = '1';
    SELECT * FROM t;
    SELECT b,c FROM t WHERE a = 1;

This is because, of these four queries, the first uses an index scan, the second and third use table scans, and the fourth, while using a primary key lookup, does not retrieve the value of any BLOB or TEXT columns.

You can help minimize issues with shared read locks by avoiding queries that use unique key lookups that retrieve BLOB or TEXT columns, or, in cases where such queries are not avoidable, by committing transactions as soon as possible afterward.
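The locking rule illustrated by the six queries on t can be condensed into a single predicate. This is a minimal Python sketch of the rule as described here, not of NDB internals; the function name and the access-method labels are hypothetical, and the query is assumed to run inside a transaction.

```python
KEY_LOOKUPS = ("primary_key_lookup", "unique_key_lookup")


def takes_shared_read_lock(access_method, reads_blob_or_text):
    """Model of the rule above for a SELECT executed within a transaction.

    access_method: hypothetical label, one of "primary_key_lookup",
        "unique_key_lookup", "index_scan", or "table_scan".
    reads_blob_or_text: True if the SELECT retrieves any BLOB or TEXT column.
    """
    return access_method in KEY_LOOKUPS and bool(reads_blob_or_text)


# The six example queries on t, labeled with the access methods named in the text.
examples = {
    "SELECT * FROM t WHERE a = 1": ("primary_key_lookup", True),
    "SELECT * FROM t WHERE c = 1": ("unique_key_lookup", True),
    "SELECT * FROM t WHERE b = 1": ("index_scan", True),
    "SELECT * FROM t WHERE d = '1'": ("table_scan", True),
    "SELECT * FROM t": ("table_scan", True),
    "SELECT b,c FROM t WHERE a = 1": ("primary_key_lookup", False),
}
locking = [q for q, args in examples.items() if takes_shared_read_lock(*args)]
```

Only the first two queries end up in `locking`, matching the description above.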

Rollbacks. There are no partial transactions, and no partial rollbacks of transactions. A duplicate key or similar error causes the entire transaction to be rolled back.

This behavior differs from that of other transactional storage engines such as InnoDB that may roll back individual statements.

Transactions and memory usage. As noted elsewhere in this chapter, MySQL Cluster does not handle large transactions well; it is better to perform a number of small transactions with a few operations each than to attempt a single large transaction containing a great many operations. Among other considerations, large transactions require very large amounts of memory. Because of this, the transactional behavior of a number of MySQL statements is affected as described in the following list:

TRUNCATE TABLE is not transactional when used on NDB tables. If a TRUNCATE TABLE fails to empty the table, then it must be re-run until it is successful.

DELETE FROM (even with no WHERE clause) is transactional. For tables containing a great many rows, you may find that performance is improved by using several DELETE FROM ... LIMIT ... statements to “chunk” the delete operation. If your objective is to empty the table, then you may wish to use TRUNCATE TABLE instead.

LOAD DATA statements. LOAD DATA INFILE is not transactional when used on NDB tables.

Important. When executing a LOAD DATA INFILE statement, the NDB engine performs commits at irregular intervals that enable better utilization of the communication network. It is not possible to know ahead of time when such commits take place.

ALTER TABLE and transactions. When copying an NDB table as part of an ALTER TABLE, the creation of the copy is nontransactional. (In any case, this operation is rolled back when the copy is deleted.)
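The “chunked” delete mentioned in the list above can be driven from application code. The sketch below assumes a Python DB-API 2.0 style connection (an object providing cursor(), commit(), and a cursor rowcount attribute); the function name, the chunk size, and the conservative table-name check are assumptions made for illustration.

```python
def delete_in_chunks(conn, table, chunk_size=1000):
    """Empty `table` with repeated DELETE ... LIMIT statements.

    Each chunk is committed separately so that no single transaction
    has to hold a great many operations. Returns the number of rows deleted.
    """
    if not table.isidentifier():
        # Identifiers cannot be bound as parameters, so accept only simple names.
        raise ValueError("unexpected table name: %r" % (table,))
    chunk_size = int(chunk_size)
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")

    total = 0
    cur = conn.cursor()
    try:
        while True:
            cur.execute("DELETE FROM `%s` LIMIT %d" % (table, chunk_size))
            deleted = cur.rowcount
            conn.commit()
            if deleted <= 0:  # nothing left (or the driver cannot report a count)
                break
            total += deleted
    finally:
        cur.close()
    return total
```

Smaller chunks keep each transaction light at the cost of more round trips; if the goal is simply to empty the table, TRUNCATE TABLE remains the simpler option, as noted above.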

Transactions and the COUNT() function. When using MySQL Cluster Replication, it is not possible to guarantee the transactional consistency of the COUNT() function on the slave. In other words, when performing on the master a series of statements (INSERT, DELETE, or both) that changes the number of rows in a table within a single transaction, executing SELECT COUNT(*) FROM table queries on the slave may yield intermediate results. This is due to the fact that SELECT COUNT(...) may perform dirty reads, and is not a bug in the NDB storage engine. (See Bug #31321 for more information.)

Starting, stopping, or restarting a node may give rise to temporary errors causing some transactions to fail. These include the following cases:

Temporary errors. When first starting a node, it is possible that you may see Error 1204 Temporary failure, distribution changed and similar temporary errors.

Errors due to node failure. The stopping or failure of any data node can result in a number of different node failure errors. (However, there should be no aborted transactions when performing a planned shutdown of the cluster.)

In either of these cases, any errors that are generated must be handled within the application. This should be done by retrying the transaction.
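A retry loop of the kind recommended above might look like the following Python sketch. It is not tied to any particular MySQL driver: `transaction` is a hypothetical callable that runs one complete transaction (including its own commit or rollback), and `is_temporary` is a hypothetical predicate that decides whether an exception represents a temporary or node-failure error worth retrying.

```python
import time


def run_with_retry(transaction, is_temporary, max_attempts=5, delay=0.1):
    """Run `transaction`, retrying when it fails with a temporary error.

    transaction: callable performing the whole transaction; its return
        value is passed through on success.
    is_temporary: callable taking the raised exception and returning True
        if the transaction should be retried.
    The wait grows linearly with the attempt number (delay, 2*delay, ...).
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return transaction()
        except Exception as exc:
            # Give up on permanent errors or once the attempts are used up.
            if attempt == max_attempts or not is_temporary(exc):
                raise
            time.sleep(delay * attempt)
```

How temporary errors are recognized depends on the client library in use, which is why the predicate is left to the caller here.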

See also Section 18.1.6.2, “Limits and Differences of MySQL Cluster from Standard MySQL Limits”.

Some database objects such as tables and indexes have different limitations when using the NDBCLUSTER storage engine:

Database and table names. When using the NDB storage engine, the maximum allowed length both for database names and for table names is 63 characters.

Number of database objects. The maximum number of all NDB database objects in a single MySQL Cluster (including databases, tables, and indexes) is limited to 20320.

Attributes per table. The maximum number of attributes (that is, columns and indexes) that can belong to a given table is 512.

Attributes per key. The maximum number of attributes per key is 32.

Row size. The maximum permitted size of any one row is 14000 bytes (as of MySQL Cluster NDB 7.0). Each BLOB or TEXT column contributes 256 + 8 = 264 bytes to this total.
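The row-size arithmetic can be worked through as follows. This Python sketch uses only the two figures given above (14000 bytes per row, 264 bytes per BLOB or TEXT column); the function names are hypothetical, and the storage size of the remaining columns is an input the caller must supply.

```python
MAX_ROW_BYTES = 14000        # maximum permitted row size stated above
BLOB_TEXT_BYTES = 256 + 8    # contribution of each BLOB or TEXT column (264)


def estimated_row_bytes(other_column_bytes, blob_text_columns):
    """Row size: bytes used by all other columns plus 264 per BLOB/TEXT column."""
    return other_column_bytes + BLOB_TEXT_BYTES * blob_text_columns


def fits_in_row(other_column_bytes, blob_text_columns):
    """True if the estimated row size is within the 14000-byte limit."""
    return estimated_row_bytes(other_column_bytes, blob_text_columns) <= MAX_ROW_BYTES
```

For example, a row with 100 bytes of other columns and two TEXT columns is estimated at 100 + 2 * 264 = 628 bytes.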

A number of features supported by other storage engines are not supported for NDB tables. Trying to use any of these features in MySQL Cluster does not cause errors in and of itself; however, errors may occur in applications that expect the features to be supported or enforced:

Foreign key constraints. Prior to MySQL Cluster NDB 7.3, the foreign key construct is ignored, just as it is by MyISAM tables. Foreign keys are supported in MySQL Cluster NDB 7.3 and later.

Index prefixes. Prefixes on indexes are not supported for NDBCLUSTER tables. If a prefix is used as part of an index specification in a statement such as CREATE TABLE, ALTER TABLE, or CREATE INDEX, the prefix is ignored.

Savepoints and rollbacks. Savepoints and rollbacks to savepoints are ignored as in MyISAM.

Durability of commits. There are no durable commits on disk. Commits are replicated, but there is no guarantee that logs are flushed to disk on commit.

Replication. Statement-based replication is not supported. Use --binlog-format=ROW (or --binlog-format=MIXED) when setting up cluster replication. See Section 18.6, “MySQL Cluster Replication”, for more information.

See Section 18.1.6.3, “Limits Relating to Transaction Handling in MySQL Cluster”, for more information relating to limitations on transaction handling in NDB.

The following performance issues are specific to or especially pronounced in MySQL Cluster:

Range scans. There are query performance issues due to sequential access to the NDB storage engine; it is also relatively more expensive to do many range scans than it is with either MyISAM or InnoDB.

Reliability of Records in range. The Records in range statistic is available but is not completely tested or officially supported. This may result in nonoptimal query plans in some cases. If necessary, you can employ USE INDEX or FORCE INDEX to alter the execution plan. See Section 8.9.3, “Index Hints”, for more information on how to do this.

Unique hash indexes. Unique hash indexes created with USING HASH cannot be used for accessing a table if NULL is given as part of the key.

The following are limitations specific to the NDBCLUSTER storage engine:

Machine architecture. All machines used in the cluster must have the same architecture. That is, all machines hosting nodes must be either big-endian or little-endian, and you cannot use a mixture of both. For example, you cannot have a management node running on a PowerPC which directs a data node that is running on an x86 machine. This restriction does not apply to machines simply running mysql or other clients that may be accessing the cluster's SQL nodes.

Binary logging. MySQL Cluster has the following limitations or restrictions with regard to binary logging:

sql_log_bin has no effect on data operations; however, it is supported for schema operations.

MySQL Cluster cannot produce a binary log for tables having BLOB columns but no primary key.

Only the following schema operations are logged in a cluster binary log which is not on the mysqld executing the statement:

See also Section 18.1.6.10, “Limitations Relating to Multiple MySQL Cluster Nodes”.

Disk Data object maximums and minimums. Disk data objects are subject to the following maximums and minimums:

Maximum number of tablespaces: 2^32 (4294967296)

Maximum number of data files per tablespace: 2^16 (65536)

Maximum data file size: The theoretical limit is 64G; however, the practical upper limit is 32G. This is equivalent to 32768 extents of 1M each.

Since a MySQL Cluster Disk Data table can use at most 1 tablespace, this means that the theoretical upper limit to the amount of data (in bytes) that can be stored on disk by a single NDB table is 32G * 65536 = 2251799813685248, or approximately 2 petabytes.

The theoretical maximum number of extents per tablespace data file is 2^16 (65536); however, for practical purposes, the recommended maximum number of extents per data file is 2^15 (32768).

The minimum and maximum possible sizes of extents for tablespace data files are 32K and 2G, respectively. See Section 13.1.18, “CREATE TABLESPACE Syntax”, for more information.
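The Disk Data figures above follow from a few powers of two. The short Python sketch below reproduces the arithmetic, assuming that M and G denote 2^20 and 2^30 bytes respectively (the interpretation under which the stated totals come out exactly); the variable names are chosen for this illustration only.

```python
MIB = 2 ** 20  # "M" as used above, assumed to mean 2^20 bytes
GIB = 2 ** 30  # "G" as used above, assumed to mean 2^30 bytes

max_tablespaces = 2 ** 32
max_data_files_per_tablespace = 2 ** 16
practical_max_data_file_size = 32 * GIB
recommended_max_extents_per_file = 2 ** 15

# 32768 extents of 1M each give the practical 32G data file size.
extent_based_file_size = recommended_max_extents_per_file * 1 * MIB

# A Disk Data table uses at most one tablespace, so the per-table limit is
# (practical data file size) * (data files per tablespace).
max_bytes_per_table = practical_max_data_file_size * max_data_files_per_tablespace

print(max_tablespaces)         # 4294967296
print(extent_based_file_size)  # 34359738368 (32G)
print(max_bytes_per_table)     # 2251799813685248 (2^51, about 2 petabytes)
```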